What is a reverse proxy server

Here's an interesting detail about the modern internet: when you visit Amazon or watch a series on Netflix, a whole system of reverse proxy servers sits between you and the content. You don't see it, but it's there.

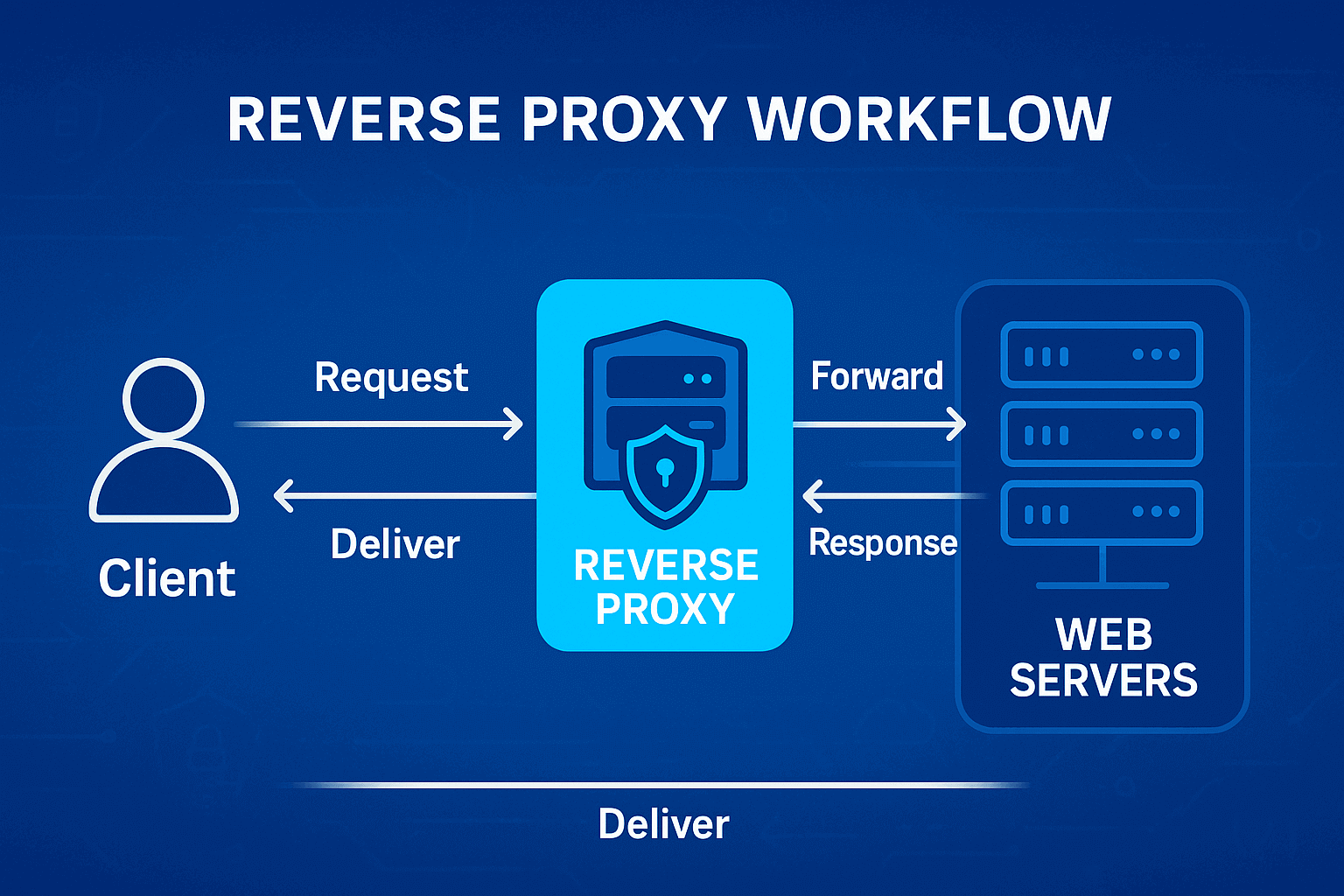

What's a reverse proxy in technical terms? It's software that receives HTTP(S) requests and decides which backend server to forward them to. Sounds simple, right? But there's a lot going on inside. Take connection pooling: the proxy keeps persistent connections to the backend servers open and reuses them for requests from different users. There's no need to establish a new TCP connection every time, which saves a network round trip on each request.
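The pooling idea can be sketched in a few lines of Python. This is a toy model, not how any particular proxy implements it: the BackendPool class, its connect factory and the opened counter are all hypothetical names, and a plain object stands in for a real TCP connection.

```python
from collections import defaultdict, deque


class BackendPool:
    """Toy keepalive pool: reuse idle connections instead of opening new ones."""

    def __init__(self, connect):
        self._connect = connect            # hypothetical factory: backend -> connection
        self._idle = defaultdict(deque)    # backend address -> idle connections
        self.opened = 0                    # how many real connections were created

    def acquire(self, backend):
        if self._idle[backend]:
            return self._idle[backend].popleft()   # reuse an idle connection
        self.opened += 1
        return self._connect(backend)              # nothing idle: open a new one

    def release(self, backend, conn):
        self._idle[backend].append(conn)           # keep it open for the next request


pool = BackendPool(connect=lambda backend: object())  # stand-in for a TCP connect
for _ in range(100):                                  # 100 sequential user requests
    conn = pool.acquire("10.0.0.2:5000")
    pool.release("10.0.0.2:5000", conn)
print(pool.opened)
```

In this model a hundred sequential requests share a single backend connection; concurrent requests would each need their own until one is released.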

And why is it called "reverse" anyway? A regular proxy hides the client from the server. A reverse proxy does the opposite — it hides the server from the client. To the user it looks like one server, though behind the scenes there could be a farm of hundreds of machines.

Forward and reverse proxy – what's the difference

How does a forward proxy work? You go into your browser settings and find the proxy settings. You enter a server address, say 192.168.1.100, and specify port 8080. Done. Now the browser goes online through this server instead of directly. To websites, your requests appear to come from the proxy server's address, not your home IP. Many people bypass geographic blocks this way.

A reverse proxy works from the opposite side. You type site.com, and DNS returns IP 1.2.3.4. But that's not the server hosting the website, it's a reverse proxy. It receives the request and checks its routing rules: everything under /api/* goes to server A, images under /images/* go to the CDN, and everything else goes to server B.
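Those routing rules boil down to a prefix lookup with a fallback. A minimal Python sketch, where the ROUTES table and the destination labels are made up for illustration:

```python
# Hypothetical routing table: (path prefix, destination), checked in order.
ROUTES = [
    ("/api/", "server-a"),
    ("/images/", "cdn"),
]
DEFAULT_BACKEND = "server-b"


def pick_backend(path: str) -> str:
    """Return the destination for a request path; fall back to the default."""
    for prefix, backend in ROUTES:
        if path.startswith(prefix):
            return backend
    return DEFAULT_BACKEND


for path in ("/api/users/42", "/images/logo.png", "/about"):
    print(path, "->", pick_backend(path))
```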

The key difference is control. You set up a forward proxy yourself as a user. The site owner sets up the reverse proxy. A forward proxy can cache data to save your traffic. A reverse proxy caches to lighten the load on its servers.

How a reverse proxy works

Let's break down a real request step by step. You typed example.com/products/123. The browser does a DNS lookup and gets 5.6.7.8. That's nginx configured as a reverse proxy. The browser sends it GET /products/123 HTTP/1.1.

Nginx parses the request and checks the location blocks in the config. It finds a match with location /products, which says proxy_pass http://products_backend. That's an upstream group of three servers. Nginx selects a server by round-robin (or another algorithm), say 10.0.0.2 port 5000.

It opens a connection to 10.0.0.2 on port 5000 (or takes one from the keepalive pool). As configured, it modifies the headers: adds X-Real-IP and X-Forwarded-For, changes Host. It sends the request and gets a response, caches it if configured to, and returns it to the client.
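The header step is easy to get wrong, so here is a rough Python model of it. The function name and the sample addresses are hypothetical; the one behavior worth noting is that X-Forwarded-For is appended to rather than overwritten, so earlier proxies in the chain stay visible.

```python
def upstream_headers(client_headers: dict, client_ip: str, upstream_host: str) -> dict:
    """Build the header set the proxy sends to the backend (illustrative only)."""
    headers = dict(client_headers)           # copy, do not mutate the original
    headers["Host"] = upstream_host          # the "changes Host" step
    headers["X-Real-IP"] = client_ip         # address of the directly connected peer
    prior = client_headers.get("X-Forwarded-For")
    # Append to any existing chain so earlier proxies stay visible.
    headers["X-Forwarded-For"] = f"{prior}, {client_ip}" if prior else client_ip
    return headers


incoming = {"Host": "example.com", "X-Forwarded-For": "203.0.113.7"}
print(upstream_headers(incoming, "198.51.100.23", "products_backend"))
```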

Why you need a reverse proxy

The first reason is horizontal scaling. You have a Rails application. One process handles 50 req/sec. Need 500 req/sec? You run 10 processes (can be on different machines), put nginx in front of them. Done, without changing code.

The second reason is related to specialization. Nginx serves static files more efficiently than Ruby/Python/PHP. You configure location /static with sendfile on, tcp_nopush on. Static files fly, the application isn't bothered.

The third reason is having a single place for cross-cutting concerns: CORS headers, rate limiting, gzip compression, SSL termination. All of it lives on the proxy. Backends stay simple and only deal with business logic.

Load balancing

The simplest case is round-robin. Requests go in a circle. First to server1, second to server2, third to server3, fourth back to server1. In nginx it looks like this.

    upstream backend {
        server 10.0.0.1:5000;
        server 10.0.0.2:5000;
        server 10.0.0.3:5000;
    }

But round-robin is dumb. It doesn't account for the fact that server1 might be overloaded while server2 is idle. That's why there's least_conn: a new request goes to the server with the fewest active connections.
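The two selection strategies described so far can be sketched in Python. This only illustrates the selection logic, not nginx internals; the connection counts in the active dictionary are invented for the example.

```python
from itertools import cycle

servers = ["10.0.0.1:5000", "10.0.0.2:5000", "10.0.0.3:5000"]

# Round-robin: hand out servers in a fixed circle, ignoring their load.
rr = cycle(servers)
print([next(rr) for _ in range(4)])


def least_conn(active: dict) -> str:
    """Pick the server that currently has the fewest active connections."""
    return min(active, key=active.get)


# Hypothetical snapshot of open connections per server.
active = {"10.0.0.1:5000": 12, "10.0.0.2:5000": 3, "10.0.0.3:5000": 7}
print(least_conn(active))
```

In nginx the switch is a single directive: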

    upstream backend {
        least_conn;
        server 10.0.0.1:5000;
        server 10.0.0.2:5000;
    }

Weights are even better when the machines differ. Say server1 has 32 GB of RAM and server2 has 8 GB. It makes sense to send four times as much traffic to the first one.
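One common way to honor weights without sending four requests in a row to the same machine is smooth weighted round-robin. The sketch below assumes that scheme purely for illustration; the function name is hypothetical.

```python
from collections import Counter


def smooth_weighted_rr(weights: dict, picks: int) -> list:
    """Smooth weighted round-robin: spreads picks in proportion to the weights."""
    current = {server: 0 for server in weights}
    total = sum(weights.values())
    order = []
    for _ in range(picks):
        for server, weight in weights.items():
            current[server] += weight            # every server gains its weight
        chosen = max(current, key=current.get)   # highest running score wins
        current[chosen] -= total                 # the winner pays back the total
        order.append(chosen)
    return order


order = smooth_weighted_rr({"10.0.0.1:5000": 4, "10.0.0.2:5000": 1}, picks=5)
print(order)
print(Counter(order))
```

Over any five consecutive picks the first server is chosen four times and the second once. The nginx version needs only the weight parameter: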

    upstream backend {
        server 10.0.0.1:5000 weight=4;
        server 10.0.0.2:5000 weight=1;
    }

Caching and content delivery acceleration

Caching in nginx deserves a dissertation of its own. A basic configuration looks like this.

    proxy_cache_path /var/cache/nginx levels=1:2 keys_zone=my_cache:10m max_size=1g inactive=60m;

    server {
        location / {
            proxy_cache my_cache;
            proxy_cache_valid 200 1h;
            proxy_cache_valid 404 1m;
            proxy_pass http://backend;
        }
    }

The levels=1:2 parameter means a two-level directory structure. Otherwise there would be a million files in one directory, and the file system would choke.

keys_zone designates an area in shared memory for storing cache keys.

max_size sets the maximum size on disk.
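To make levels=1:2 concrete: the cache file is named after the MD5 hash of the cache key, and the directory names are taken from the end of that hash (one character, then two). A Python sketch of the mapping follows; the sample key is hypothetical, since the real key is whatever proxy_cache_key evaluates to.

```python
import hashlib


def cache_file_path(cache_root: str, key_md5: str) -> str:
    """Map an MD5 hex digest to its on-disk path under levels=1:2."""
    level1 = key_md5[-1]        # one character: first-level directory
    level2 = key_md5[-3:-1]     # two characters: second-level directory
    return f"{cache_root}/{level1}/{level2}/{key_md5}"


# Hypothetical cache key, used only to produce a digest for the demo.
key = "httpbackend/products/123"
digest = hashlib.md5(key.encode()).hexdigest()
print(cache_file_path("/var/cache/nginx", digest))
```

With 16 first-level and 256 second-level directories, a million cached files average out to a few hundred per directory.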

The real trick is proxy_cache_use_stale. Backend crashed? Nginx will serve the stale copy from the cache.

    proxy_cache_use_stale error timeout http_500 http_502 http_503 http_504;

HTTPS encryption and security

The SSL/TLS handshake eats CPU like crazy. As a rough figure, an RSA 2048-bit handshake costs about 0.5 ms of CPU time on a modern CPU. With 1000 new connections per second, half a core goes to cryptography alone.
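The half-a-core estimate is just CPU time spent per second of wall-clock time. A tiny Python helper (hypothetical name) makes it easy to plug in your own numbers; the 0.5 ms figure is the article's rough estimate, not a measurement.

```python
def cores_for_handshakes(new_conns_per_sec: float, ms_per_handshake: float) -> float:
    """CPU cores kept busy by handshakes: CPU-seconds spent per wall-clock second."""
    return new_conns_per_sec * ms_per_handshake / 1000.0


print(cores_for_handshakes(1000, 0.5))   # the article's figures
print(cores_for_handshakes(5000, 0.5))   # hypothetical traffic spike
```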

Terminating HTTPS at the reverse proxy solves the problem. SSL terminates at nginx, and plain HTTP goes on from there to the backends.

    server {
        listen 443 ssl http2;
        ssl_certificate /etc/ssl/certs/cert.pem;
        ssl_certificate_key /etc/ssl/private/key.pem;
        ssl_session_cache shared:SSL:10m;
        ssl_session_timeout 10m;

        location / {
            proxy_pass http://backend;
        }
    }

The ssl_session_cache parameter matters a lot. Without session reuse, every new connection requires a full handshake. With it, returning clients resume their previous session with an abbreviated handshake, which can be up to 10 times faster.

Masking internal infrastructure

Backends can run on anything. You have a legacy PHP4 application? Hide it behind nginx and no one will know. API in Go, admin panel in Django, reports in .NET? From the outside it looks like a single service.

Headers can be cleaned up. The backend returns Server: Apache/2.2.15 (CentOS)? By default nginx doesn't pass the backend's Server header on to the client and sends its own instead. Turn off the version number in it, and strip other telltale headers such as X-Powered-By.

    server_tokens off;
    proxy_hide_header X-Powered-By;

And if you're working with platforms that are strict about IP reputation, you'll also have to think about the quality of your outgoing connections. Specialized services like GonzoProxy help here. They have 20+ million verified residential IPs that don't get flagged as proxies, which is especially relevant for parsing and multi-accounting.

For web application protection

Rate limiting saves from dumb brute force. Someone's hammering POST /login? We limit.

    limit_req_zone $binary_remote_addr zone=login:10m rate=5r/m;

    location /login {
        limit_req zone=login burst=5 nodelay;
        proxy_pass http://backend;
    }

That's 5 requests per minute per IP. The burst=5 parameter lets a client exceed the rate, but by no more than 5 requests. nodelay means those burst requests are served immediately instead of being spaced out, and anything beyond the burst is rejected with 503 right away.
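The interplay of rate and burst is easier to see in a small simulation. The class below is a simplified leaky-bucket model written for this article, not a port of the nginx module, and its names are hypothetical. Time is passed in explicitly so the behavior is reproducible.

```python
class RequestLimiter:
    """Simplified leaky-bucket accounting with a burst allowance and no delay."""

    def __init__(self, rate_per_sec: float, burst: int):
        self.rate = rate_per_sec
        self.burst = burst
        self.excess = 0.0     # requests currently above the allowed rate
        self.last = None      # time of the last accepted request

    def allow(self, now: float) -> bool:
        if self.last is None:                       # first request from this IP
            self.last = now
            return True
        leaked = self.rate * (now - self.last)      # capacity regained since then
        excess = max(self.excess - leaked + 1.0, 0.0)
        if excess > self.burst:
            return False                            # over the burst: reject (503)
        self.excess, self.last = excess, now
        return True


limiter = RequestLimiter(rate_per_sec=5 / 60, burst=5)
print([limiter.allow(now=0.0) for _ in range(8)])   # 8 requests at the same instant
print(limiter.allow(now=13.0))                      # one more, 13 seconds later
```

In this model six of the eight simultaneous requests pass (one regular plus the burst of five) and the rest are rejected. Thirteen seconds later enough capacity has drained for one more request.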

ModSecurity (a WAF that can run with nginx) filters a wide range of attacks: SQL injection, XSS, path traversal. The out-of-the-box rules catch most typical attacks. But false positives happen, so it needs tuning.

For scalability and fault tolerance

Adding a server to nginx is simple. Add one line and reload.

    upstream backend {
        server 10.0.0.1:5000;
        server 10.0.0.2:5000;
        server 10.0.0.3:5000; # new
    }

No downtime. Nginx rereads the config, old workers finish their current requests and exit, and new ones start with the new config.

Health checks in the open source version of nginx are primitive: passive only. If requests to a server fail max_fails times within fail_timeout, it's excluded for the next fail_timeout seconds. Nginx Plus has active health checks, but that's paid.
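A passive check is just bookkeeping on real traffic. The Python sketch below is a toy model of the max_fails / fail_timeout idea for a single backend; the class and method names are hypothetical and timestamps are passed in by hand.

```python
class PassiveHealth:
    """Toy model of max_fails / fail_timeout bookkeeping for one backend."""

    def __init__(self, max_fails: int = 1, fail_timeout: float = 10.0):
        self.max_fails = max_fails
        self.fail_timeout = fail_timeout
        self.failures = []          # timestamps of recent failed attempts
        self.down_until = 0.0       # backend is skipped until this time

    def record_failure(self, now: float) -> None:
        # Only failures inside the fail_timeout window count.
        self.failures = [t for t in self.failures if now - t < self.fail_timeout]
        self.failures.append(now)
        if len(self.failures) >= self.max_fails:
            self.down_until = now + self.fail_timeout
            self.failures.clear()

    def available(self, now: float) -> bool:
        return now >= self.down_until


backend = PassiveHealth(max_fails=2, fail_timeout=10.0)
backend.record_failure(now=0.0)
print(backend.available(now=1.0))    # one failure is still below max_fails
backend.record_failure(now=2.0)
print(backend.available(now=3.0))    # second failure inside the window
print(backend.available(now=12.5))   # fail_timeout has passed
```

The weakness is visible in the model: a backend is only marked down after real user requests have already failed against it.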

For corporate network optimization

Single entry point for all services. Instead of a dozen DNS A-records you get one. Instead of a dozen SSL certificates you use one wildcard. Instead of firewall setup for each service you only configure for the proxy.

Authentication can be moved to the proxy. Use nginx with the auth_request module.
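The decision rule behind auth_request is short: nginx sends a subrequest to the auth endpoint and acts on its status code. A Python sketch of that rule as I understand it from the module documentation (the function name is hypothetical):

```python
from typing import Optional


def auth_request_outcome(subrequest_status: int) -> Optional[int]:
    """Status sent to the client, or None if the request may go on to the backend."""
    if 200 <= subrequest_status < 300:
        return None                   # allowed: continue to proxy_pass
    if subrequest_status in (401, 403):
        return subrequest_status      # denied with the same code
    return 500                        # anything else is treated as an error


for status in (204, 401, 403, 302):
    print(status, "->", auth_request_outcome(status))
```

The corresponding nginx configuration: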

    location /internal/ {
        auth_request /auth;
        proxy_pass http://internal_service;
    }

    location = /auth {
        internal;
        proxy_pass http://auth_service/verify;
        # The auth subrequest carries no body, so don't forward one
        proxy_pass_request_body off;
        proxy_set_header Content-Length "";
    }

By the way, for distributed teams with lots of external integrations, quality proxies like GonzoProxy become a must-have. When you're hitting partner APIs 1000 times per minute, residential IPs with low fraud scores are critical.

Nginx as reverse proxy

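Event-driven architecture is nginx's killer feature. One worker process handles thousands of connections through epoll (Linux) or kqueue (BSD). Apache in its process-per-connection mode keeps spawning processes under load and can eat gigabytes of RAM, while nginx often fits in around 100 MB.

Nginx config reads like a DSL: nested blocks, directive inheritance. Location blocks match by priority, not by their order in the file. An exact match (=) wins first. Otherwise nginx remembers the longest matching prefix, then checks regexes (~ and ~*) in the order they appear, and the first regex that matches wins. The longest prefix is used only if no regex matches, or if it is marked with ^~, which skips the regex check.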
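The matching order can be modelled in Python. This is a deliberately reduced sketch: it covers exact, regex and prefix locations only, leaves out the ^~ modifier, and the tables and labels are hypothetical.

```python
import re

# Hypothetical location table mirroring the three kinds of blocks.
EXACT = {"/api/v1/status": "exact"}
PREFIXES = {"/static/": "static files", "/": "default"}
REGEXES = [(re.compile(r"\.php$"), "php backend")]   # checked in order


def match_location(path: str) -> str:
    if path in EXACT:                                  # 1. exact match wins
        return EXACT[path]
    for pattern, name in REGEXES:                      # 2. first matching regex
        if pattern.search(path):
            return name
    candidates = [p for p in PREFIXES if path.startswith(p)]
    return PREFIXES[max(candidates, key=len)]          # 3. longest prefix


for path in ("/api/v1/status", "/static/app.php", "/static/logo.png", "/about"):
    print(path, "->", match_location(path))
```

Note the second case: a .php file under /static/ goes to the regex location, not the prefix one. The same three kinds of blocks in nginx syntax: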

    location = /api/v1/status { # exact match, maximum priority
        return 200 "OK";
    }

    location ~ \.php$ { # regex, medium priority
        proxy_pass http://php_backend;
    }

    location /static/ { # prefix, low priority
        root /var/www;
    }

Apache as reverse proxy

Apache with mod_proxy works differently. It uses a process-based model (prefork MPM) or a thread-based one (worker MPM). Each connection gets a separate process or thread. That's memory-hungry, but isolation is better.

Configuration through .htaccess is a double-edged sword. It's convenient for shared hosting, but it costs performance on every request, because Apache rereads the .htaccess files along the entire path.

    <VirtualHost *:80>
        ProxyPreserveHost On
        ProxyPass / http://127.0.0.1:8080/
        ProxyPassReverse / http://127.0.0.1:8080/
    </VirtualHost>

ProxyPreserveHost is important. Without it the backend will get Host: 127.0.0.1:8080 instead of the original domain.

Setting up reverse proxy in Windows environment

IIS with ARR (Application Request Routing) is the standard for Windows. GUI for configuration helps those who fear the console. URL Rewrite module is quite powerful, supports regexes.

    <system.webServer>
        <rewrite>
            <rules>
                <rule name="ReverseProxy" stopProcessing="true">
                    <match url="(.*)" />
                    <action type="Rewrite" url="http://backend/{R:1}" />
                </rule>
            </rules>
        </rewrite>
    </system.webServer>

The problem with IIS is performance. On the same hardware nginx can handle 5-10 times more requests, depending on the workload.

Prerequisites (server, access, basic knowledge)

VPS/VDS minimum is 1 CPU, 512 MB RAM for tests. For production take 2+ CPU, 2+ GB RAM. SSD is mandatory if you're planning caching. Random I/O on HDD will kill performance.

You need root or sudo access. Without this nginx won't start on ports 80/443 (privileged). You can start on 8080, but then you'll have to specify the port in the URL.

Commands that will definitely come in handy. ss -tulpn will show which ports are listening. journalctl -u nginx will output systemd unit logs. nginx -T will show the full configuration including includes. curl -I will check response headers.

Setting up reverse proxy in Nginx (configuration example)

Installing nginx. For Ubuntu/Debian use the command apt update && apt install -y nginx.

For RHEL/CentOS/Rocky run yum install -y epel-release && yum install -y nginx.

On Debian-based systems the config structure looks like this: /etc/nginx/sites-available/ holds the configs, and /etc/nginx/sites-enabled/ holds symlinks to the active ones. On RHEL-based systems all configs live in /etc/nginx/conf.d/ with a .conf extension.

Write the config to /etc/nginx/sites-available/myapp.

    upstream app_backend {
        server 127.0.0.1:3000 max_fails=2 fail_timeout=10s;
        server 127.0.0.1:3001 max_fails=2 fail_timeout=10s backup; # backup server
        keepalive 32; # keep up to 32 idle connections open per worker
    }

    server {
        listen 80;
        server_name myapp.com;

        # Buffer sizes are important for performance
        client_body_buffer_size 128k;
        client_max_body_size 10m;
        proxy_buffer_size 4k;
        proxy_buffers 32 4k;

        location / {
            proxy_pass http://app_backend;
            proxy_http_version 1.1; # for keepalive
            proxy_set_header Connection ""; # for keepalive

            # Pass real client IP
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_set_header Host $host;

            # Timeouts
            proxy_connect_timeout 5s;
            proxy_send_timeout 60s;
            proxy_read_timeout 60s;
        }

        # Serve static directly
        location ~* \.(jpg|jpeg|gif|png|css|js|ico|xml)$ {
            expires 30d;
            add_header Cache-Control "public, immutable";
            root /var/www/static;
        }
    }

Activate it with ln -s /etc/nginx/sites-available/myapp /etc/nginx/sites-enabled/ and then run nginx -t && systemctl reload nginx.

Setting up reverse proxy for HTTPS

Certbot is the simplest way to get a free TLS certificate from Let's Encrypt. Install it with snap install --classic certbot.

Then run certbot --nginx -d myapp.com -d www.myapp.com --email admin@myapp.com --agree-tos --no-eff-email.

Certbot will add SSL settings to the config itself. But it's better to tweak some parameters.

    # Modern protocols and ciphers
    ssl_protocols TLSv1.2 TLSv1.3;
    ssl_ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256;
    ssl_prefer_server_ciphers off;

    # OCSP stapling speeds up certificate verification
    ssl_stapling on;
    ssl_stapling_verify on;

    # HTTP/2 for performance
    listen 443 ssl http2;

Checking and testing operation

Test the config before applying it. This is sacred. Run nginx -t.

If you see "syntax is ok" and "test is successful", you can reload. "test failed" means read the error message and fix what it points to.

Check what's listening with ss -tulpn | grep nginx. You should see ports 80 and 443.

curl is the tool for checking responses. For HTTP use curl -I http://myapp.com. For HTTPS run curl -I https://myapp.com. Follow redirects with curl -IL http://myapp.com. Send a custom header with curl -H "X-Test: 123" http://myapp.com.

For debugging, watch the general log with tail -f /var/log/nginx/access.log. Errors show up in tail -f /var/log/nginx/error.log. Filter by IP with tail -f /var/log/nginx/access.log | grep "192.168".
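One caveat with the grep filter: it matches "192.168" anywhere in the line, including the URL. If that matters, a short Python filter can look at the client address only. The sketch assumes the default log format, where the client address is the first field; the function name and sample lines are made up.

```python
def from_subnet(log_lines, ip_prefix="192.168."):
    """Yield access-log lines whose client address starts with ip_prefix."""
    for line in log_lines:
        client_ip = line.split(" ", 1)[0]   # first field is the client address
        if client_ip.startswith(ip_prefix):
            yield line


sample = [
    '192.168.1.15 - - [10/Oct/2025:13:55:36 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/8.5.0"',
    '203.0.113.9 - - [10/Oct/2025:13:55:37 +0000] "GET /192.168 HTTP/1.1" 404 153 "-" "curl/8.5.0"',
]
for line in from_subnet(sample):
    print(line)
```

Only the first sample line is printed, whereas grep "192.168" would have matched both.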